Interferometry is a powerful measurement technique based on the principle of wave interference. Interference occurs when two or more coherent waves are superposed. Coherent waves have the same frequency and a constant phase relationship. The intensity pattern recorded by a detector is then determined by the phase difference between the waves.

- Waves that are in phase undergo constructive interference. Their crests align, so the phase difference is zero or a whole multiple of 2π. They reinforce each other to produce maximum intensity (a bright fringe).

- Waves that are exactly out of phase undergo destructive interference. The crest of one aligns with the trough of the other, so the phase difference is an odd multiple of π. They cancel each other to produce minimum intensity (a dark fringe). The cancellation is complete only when the two amplitudes are equal.

- Waves with an intermediate phase difference produce an intermediate intensity. This intensity can be measured precisely to determine their relative phase.
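The three cases above follow from the standard two-beam interference relation for perfectly coherent beams of intensities `I1` and `I2`: `I = I1 + I2 + 2*sqrt(I1*I2)*cos(dphi)`.

The Python sketch below evaluates this relation and then inverts it to recover a phase difference from one measured intensity. The function names are hypothetical. The sketch assumes ideal, noise-free beams whose intensities `I1` and `I2` are known and nonzero.

```python
import math


def two_beam_intensity(i1: float, i2: float, delta_phi: float) -> float:
    """Detected intensity of two perfectly coherent beams with phase difference delta_phi (radians)."""
    return i1 + i2 + 2.0 * math.sqrt(i1 * i2) * math.cos(delta_phi)


def phase_from_intensity(intensity: float, i1: float, i2: float) -> float:
    """Recover |delta_phi| in [0, pi] from one measured intensity (requires i1, i2 > 0).

    The sign of the phase, and any multiple of 2*pi, cannot be recovered from one reading.
    """
    c = (intensity - i1 - i2) / (2.0 * math.sqrt(i1 * i2))
    c = max(-1.0, min(1.0, c))  # clamp so rounding noise cannot push acos out of its domain
    return math.acos(c)


if __name__ == "__main__":
    # Hypothetical equal-intensity beams, I1 = I2 = 1
    for label, phi in [("in phase", 0.0), ("intermediate", math.pi / 3), ("out of phase", math.pi)]:
        i = two_beam_intensity(1.0, 1.0, phi)
        print(f"{label:13s} I = {i:.3f}  recovered phase = {phase_from_intensity(i, 1.0, 1.0):.3f} rad")
```

A single intensity reading fixes the phase difference only up to its sign and modulo 2π. This is one reason practical instruments often track fringes continuously or combine several phase-shifted measurements.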

In optical applications, a single coherent beam (often from a laser) is split into two or more paths. When the beams are recombined, the interference pattern reveals minute differences in the optical path length each beam has traveled. Optical path length is the product of refractive index and geometric distance. This enables exceptionally precise measurements of distance, surface topography, and refractive-index changes.
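A rough worked example shows the scale involved. A phase difference relates to an optical path difference (OPD) by `dphi = 2*pi*OPD/lambda`, where `lambda` is the vacuum wavelength. A refractive-index change `dn` over a geometric length `L` produces `OPD = L*dn`.

The sketch below assumes a 632.8 nm helium-neon source, a 10 cm path, and `dn = 1e-6`. These values and the function names are illustrative assumptions, not taken from the text.

```python
import math


def phase_from_opd(opd_m: float, wavelength_m: float) -> float:
    """Phase difference (radians) for an optical path difference opd_m at vacuum wavelength wavelength_m."""
    return 2.0 * math.pi * opd_m / wavelength_m


def opd_from_index_change(delta_n: float, length_m: float) -> float:
    """OPD (m) when the refractive index along a path of geometric length length_m changes by delta_n."""
    return delta_n * length_m


if __name__ == "__main__":
    wavelength = 632.8e-9  # assumed helium-neon laser wavelength, metres
    length = 0.10          # assumed 10 cm sample path, metres
    delta_n = 1e-6         # assumed refractive-index change

    opd = opd_from_index_change(delta_n, length)
    dphi = phase_from_opd(opd, wavelength)
    print(f"OPD = {opd:.2e} m, phase shift = {dphi:.3f} rad ({dphi / (2 * math.pi):.3f} fringes)")
```

With these numbers the path changes by only 100 nm. The phase shift still comes out near 1 rad, roughly 0.16 of a fringe, which is easily detectable.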

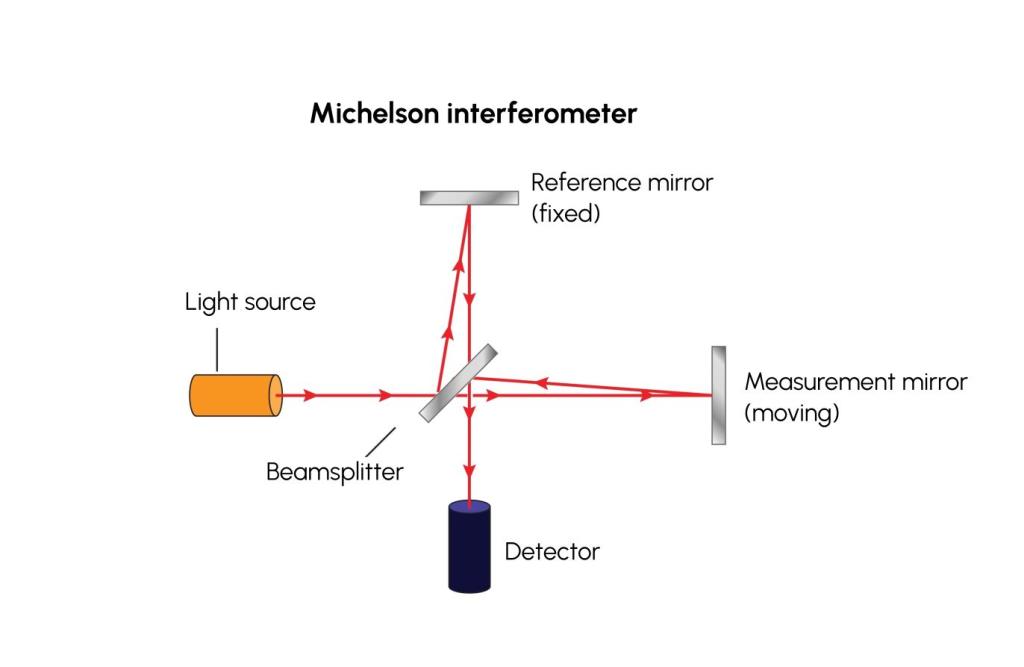

A classic implementation is the Michelson interferometer. A beamsplitter divides the incoming beam into two perpendicular arms: a fixed reference arm and a measurement arm. Mirrors at the ends of the arms reflect the beams back to the beamsplitter, which recombines them and directs them to a detector.

Moving the measurement mirror, or changing the refractive index along that arm, alters the optical path length and shifts the interference fringes. Because the light traverses the arm twice, a mirror displacement of half a wavelength changes the path difference by one full wavelength. This moves the pattern by one fringe. Counting fringes, and interpolating fractions of a fringe, quantifies the change with sub-wavelength accuracy.
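The fringe-counting idea can be simulated with a minimal Python sketch. It relies on these assumptions:

- an idealized Michelson with equal beam intensities,
- a measurement arm in air (`n ≈ 1`),
- an assumed 632.8 nm helium-neon source,
- a mirror that moves 5 µm while the detector is sampled finely.

Fringes are counted as upward crossings of the signal's mean level. The function names and the constant `WAVELENGTH` are hypothetical.

```python
import math

WAVELENGTH = 632.8e-9  # assumed helium-neon source wavelength, metres


def detector_signal(d: float, wavelength: float = WAVELENGTH) -> float:
    """Idealized Michelson output (equal beams, mean level 1.0) for mirror displacement d in metres."""
    # Light crosses the measurement arm twice, so a displacement d changes the OPD by 2*d (arm in air, n ~ 1).
    return 1.0 + math.cos(2.0 * math.pi * (2.0 * d) / wavelength)


def count_fringes(samples: list[float]) -> int:
    """Count whole fringes as upward crossings of the mean level 1.0 (resolution: one fringe)."""
    count = 0
    for prev, cur in zip(samples, samples[1:]):
        if prev < 1.0 <= cur:
            count += 1
    return count


def displacement_from_fringes(n_fringes: float, wavelength: float = WAVELENGTH) -> float:
    """Each full fringe corresponds to a mirror displacement of wavelength / 2."""
    return n_fringes * wavelength / 2.0


if __name__ == "__main__":
    true_d = 5.0e-6  # assumed mirror travel: 5 micrometres
    steps = 10_000   # detector samples during the move (fine enough that no crossing is missed)
    samples = [detector_signal(true_d * k / steps) for k in range(steps + 1)]
    n = count_fringes(samples)
    print(f"counted {n} fringes -> estimated displacement {displacement_from_fringes(n) * 1e6:.3f} um")
```

Whole-fringe counting like this resolves the displacement only to about λ/2, here roughly 0.3 µm. A single detector also cannot tell whether the mirror moved forward or back. Finer, direction-aware readings are typically obtained by interpolating within a fringe, for example with two detector signals in quadrature.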